Consensus

Here you can learn how the Narwhal and Mysteciti Consensus mechanism works.

Overview

There are two types of transactions on Sui: simple transactions involving address-owned and transactions immutable objects that can be processed quickly (fast path) and special transactions involving shared objects that need to go through Consensus.

Sui’s object model also allows for more scalability by allowing batching and ordering transactions around the objects associated with such transactions. Instead of a single-lane system, which is the case with most blockchains, Sui can process many groups of transactions at once, making Sui one of the fastest and most scalable blockchains. To read more about scalability and transaction execution, go here.

The names highlight that the components split the responsibilities of:

Narwhal - ensuring the availability of data submitted to Consensus;

Mysteciti - agreeing on a specific ordering of this data.

DAG Data Structure

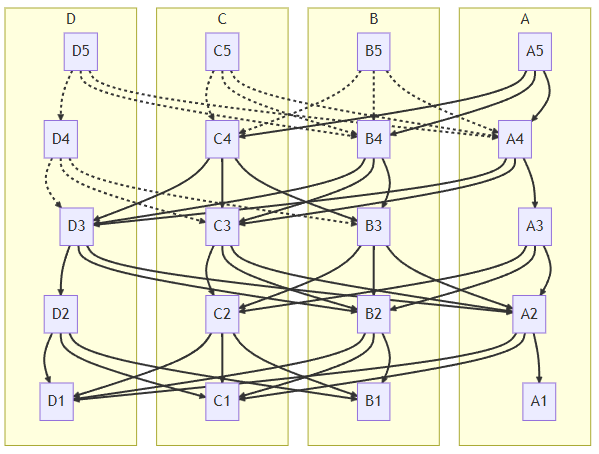

Narwhal arranges transactions in a DAG (Directed Acyclic Graph) data structure, in which transactions are sequenced in consecutive rows at each round and form an acyclic graph, instead of putting in line, one by one. At each round, transactions in the row are selected to be executed. Then, as all rounds pass, these transactions are executed in a batch.

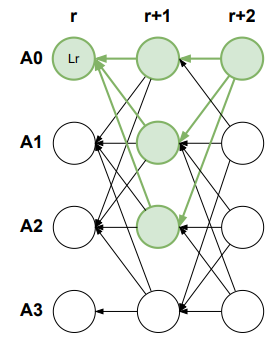

The figure below shows how transactions are ordered and sequenced following the DAG structure.

Narwhal Mempool

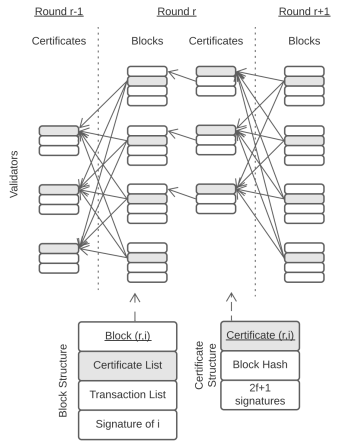

The Narwhal Mempool merges dependable broadcasting and storage. Each validator keeps track of the current local round 𝑟, which starts at zero. Validators continuously accept transactions from clients and compile them into a transaction queue.

They also receive certificates of availability for blocks at 𝑟 and gather them into a certificate list. Once certificates for round 𝑟 − 1 are collected from 2𝑓 + 1 unique validators, a validator advances the local round to 𝑟, forms, and disseminates a block for the new round. Each block encompasses its creator’s identity, local round 𝑟, the present list of transactions and certificates from 𝑟 −1, and a signature from its creator. Accurate validators only generate a single block per round.

Validators dependably broadcast each block they form to ensure its integrity and availability. Essentially, the block creator transmits the block to all validators, who verify its validity and then respond with their signatures. A valid block must:

a) include a valid signature from its creator;

b) be at the local round 𝑟 of the validator verifying it;

c) be at round 0 (genesis) or include certificates for at least 2𝑓 + 1 blocks of round 𝑟 − 1;

d) be the first one received from the creator for round 𝑟.

If a block is valid, the other validators store and acknowledge it by signing its block digest, round number, and creator’s identity. However, blocks with a future round contain 2𝑓 + 1 certificates that ensure a validator progresses its round into the future and signs the newer block. Once the creator receives 2𝑓 + 1 distinct acknowledgments for a block, it combines them into a certificate of block availability that includes the block digest, current round, and creator identity. Then, the creator transmits the certificate to all other validators so that they can incorporate it in their next block.

The system is initialized through all validators forming and certifying empty blocks for round 𝑟 = 0. These blocks do not encompass any transactions and are valid without reference to certificates for past blocks.

The figure below illustrates the Narwhal Mempool operation.

TLDR, the Narwhal Mempool offers:

- a high-throughput data availability engine with cryptographic proofs of data availability at a primary node

- a structured graph data structure for traversing this information

- a scaled architecture, splitting the disk I/O and networking requirements across several workers

Mysteciti Consensus Mechanism

Protocol Overview

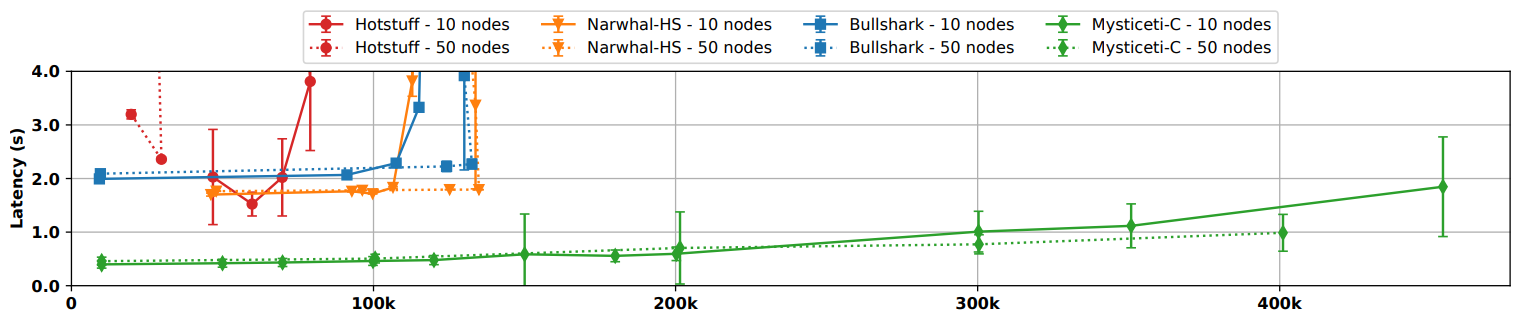

Parallelization has always been the cornerstone of Sui. Initially, Sui used Bullshark as the Consensus Mechanism; however, later, there were found ways to improve its performance by moving to Mysctcity. With Bullshark, the latency was comparably high: about 2-3 seconds. MYSTICETI-C, an uncertified DAG-based protocol, reduces this latency significantly by avoiding multiple round trips and allowing faster block commits. Early BFT consensus protocols like PBFT, while faster, required many authenticated messages, consuming resources and resulting in low throughput.

MYSTICETI-C is a family of DAG-based protocols designed for low-latency and low-CPU operation in Byzantine settings. MYSTICETI-C achieves significant latency reductions by committing blocks independently without explicit certificates. It has been adopted by the Sui blockchain, reducing latency by 80% compared to Bullshark. MYSTICETI-FPC extends MYSTICETI-C to support consensusless transactions in BFT systems, reducing latency and CPU usage by avoiding explicit certificates for each transaction.

In each epoch of a message-passing system with ( n = 3f + 1 ) validators, up to ( f ) validators can be corrupted by an adversary, while the remaining ( 2f + 1 ) honest validators follow the MYSTICETI protocols. These protocols ensure safety and liveness in a partially synchronous network, guaranteeing that no two correct validators commit inconsistent transactions.

Each validator broadcasts messages with a sequence number, and the reliable broadcast abstraction ensures agreement, integrity, and validity among honest validators. Byzantine atomic broadcast adds total order, ensuring consistent message delivery order across all honest validators.

MYSTICETI protocols involve validators proposing unique signed blocks at each round, incorporating transactions and references from prior rounds. Clients can resubmit to another validator if a transaction isn’t finalized in time. For a block to be correct, it must include the author’s signature, a round number, a list of transactions, and at least 2f + 1 distinct hashes from the previous round. A block is valid if the signature is valid, the author is part of the validator set, and all hashes point to distinct valid blocks from previous rounds. Identifying DAG patterns involves checking if a block supports a past block by following the sequence of hashed blocks in a depth-first search.

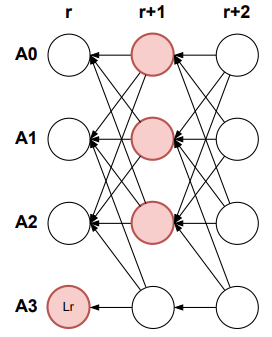

MYSTICETI-C and MYSTICETI-FPC use the DAG structure to make decisions based on two patterns: the skip pattern, where 2f + 1 blocks do not support a proposal, and the certificate pattern, where 2f + 1 blocks support a proposal, ensuring only certified blocks are committed.

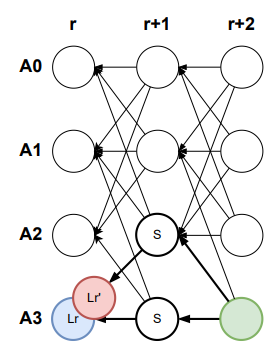

Block (A3, r + 2, ·) (green) may reference blocks from different validators that support both (A3, r, Lr) (blue) and (A3, r, L′

r) (red) equivocating blocks. If any of the blocks gather 2f + 1 support, it will be certified,

and we show that, at most, one may do so.

The figure below illustrates the skip pattern, where blocks (A0, r+1, ·), (A1, r+1, ·), (A2, r+

1, ·) do not support (A3, r, Lr).

The figure below illustrates the certificate pattern; block (A0, r+2, ·) is a certificate for (A0, r, Lr).

To ensure liveness without randomization, MYSTICETI-C relies on timeouts, guaranteeing liveness for one primary block per round after GST by requiring validators to wait for it before proceeding.

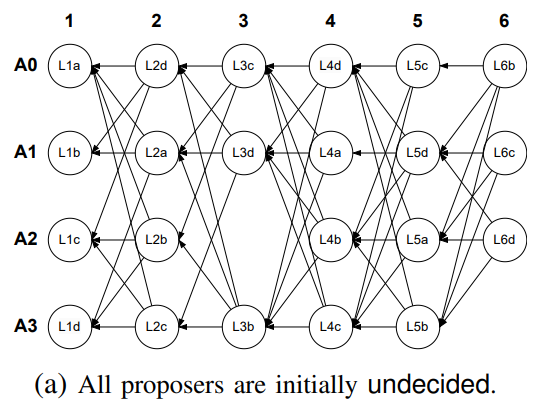

Proposer Slots

MYSTICETI-C is the first DAG-based consensus protocol to decide blocks in 3 message delays by treating every block as a first-class block and foregoing explicit certification. It can instantly identify and exclude crashed validators, the most common failure in blockchains. Additionally, MYSTICETI-C introduces proposer slots, which can be in states like to-commit, to-skip, or undecided, to manage block proposals and mitigate network asynchrony.

The number of proposer slots per round can be configured, and for systems with few faults, it can be set to (n) to allow every block a chance to commit in three steps. A deterministic total order is established among all pending proposer slots, which may remain fixed or change per round. Validators wait for the primary validator’s proposal for a set delay before generating their own proposal for the next round, ensuring the protocol’s liveness.

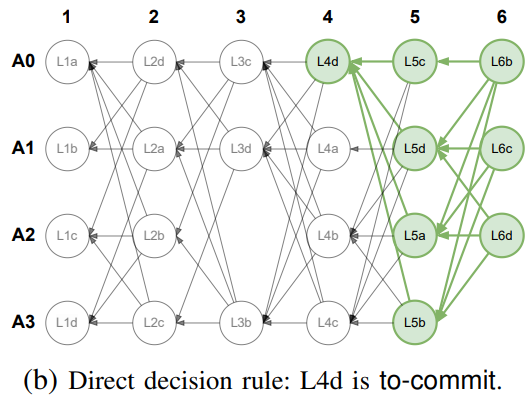

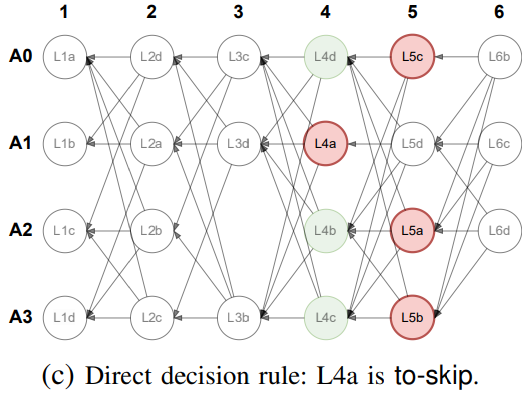

Direct Decision Rule

Initially, all proposer slots are undecided, and the goal is to mark them as either to-commit or to-skip by detecting specific DAG patterns. The direct decision rule operates in three steps, starting with the latest proposer slot and marking it as to-commit if it observes 2f + 1 commit patterns, which helps lower latency by certifying blocks while constructing the DAG.

The figure below shows L4d marked as to-commit in three messages due to these patterns.

If a slot observes a skip pattern, it is marked as to-skip, as shown with L4a in the figure below.

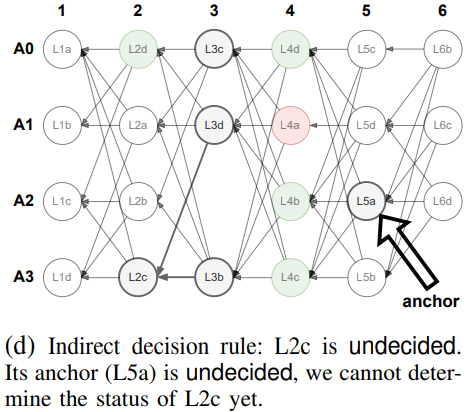

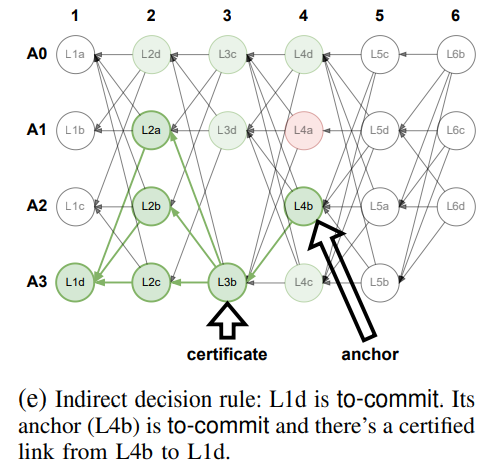

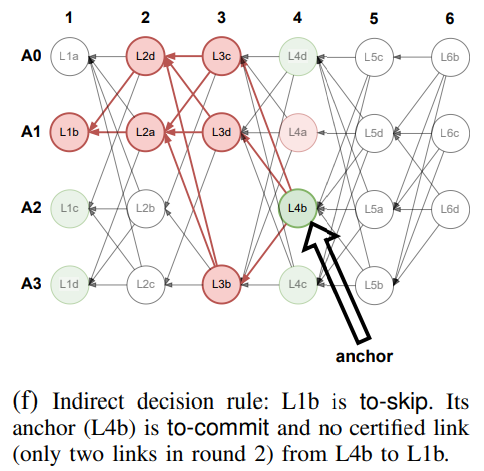

Indirect Decision Rule

If the direct decision rule fails, the validator uses the indirect decision rule, which operates in two stages. First, it searches for an anchor, the first slot with a round number greater than (r + 2) that is marked as either undecided or to-commit. If the anchor is undecided, the slot is marked as undecided; if the anchor is to-commit, the slot is marked as to-commit or to-skip based on the presence of a certificate pattern. This design ensures the safety of MYSTICETI-C by leveraging the predefined total ordering of proposer slots, making commit decisions deterministic.

The figures below illustrate the indirect decision rule.

After processing all slots, the validator creates an ordered sequence and commits slots marked as to-commit while skipping those marked as to-skip, continuing until an undecided slot is found. This ensures safety, as all slots will eventually be classified as to-commit or to-skip. Unlike previous designs, MYSTICETI-C uses undecided slots to maintain safety without impacting performance.

Choosing the Number of Proposer Slots

Assuming the number of proposer slots per round equals the committee size offers optimal latency under normal conditions but can impact performance during extreme asynchrony or Byzantine attacks. In such cases, the validator may need to use the indirect decision rule more often, increasing undecided slots and delaying the commit sequence. To mitigate this, HammerHead selects the best-performing leaders, balancing performance without significantly increasing latency. Section III-D details MYSTICETI-C algorithms that allow configurable proposer slots per round.

Fast-Path Protocol

Hybrid blockchains, like Sui, optimize performance by allowing certain objects, such as coins, to bypass consensus and be finalized quickly through reliable broadcast. In MYSTICETI-FPC, validators include transactions in their blocks if they don’t conflict with previously voted transactions, ensuring fast path safety by requiring 2f+1 votes for certification. This guarantees that no two conflicting transactions are certified in the same epoch.

MYSTICETI-FPC integrates the fast path directly into the DAG structure, embedding validators’ votes within signed blocks produced during consensus. This approach reduces the need for additional protocol messages and simplifies checkpoints by using finalized fast path transactions referenced in the causal history of each MYSTICETI-C commit. As a result, it decreases the number of signature operations, eliminates separate checkpointing mechanisms, and simplifies epoch transitions.

Epoch Change and Reconfiguration

Most quorum-based blockchains operate in epochs, allowing validators to join or leave at these boundaries. Epoch boundaries also help unlock transactions that lost liveness due to client equivocation. The reconfiguration process must ensure that transactions finalized in one epoch persist into the next, avoiding conflicts with future transactions. MYSTICETI-FPC addresses this by including all finalized transactions from the current epoch in the causal history of the final commit, which serves as the starting state for the next epoch.

To solve this challenge, MYSTICETI-FPC addresses the challenge of epoch transitions by introducing an epoch-change bit in its blocks. When set to 1, this bit pauses the finalization of fast path transactions, preventing race conditions near the end of an epoch. Validators stop processing fast-path transactions and set the epoch-change bit to 1, ensuring a smooth transition to the next epoch where blocked transactions can be revisited.

The epoch-change mechanism ensures that transactions finalized in an epoch persist across all subsequent epochs, maintaining critical safety and liveness properties. The liveness of MYSTICETI-FPC depends on MYSTICETI-C, ensuring non-conflicting transactions gather sufficient votes and are included in commits.

Performance

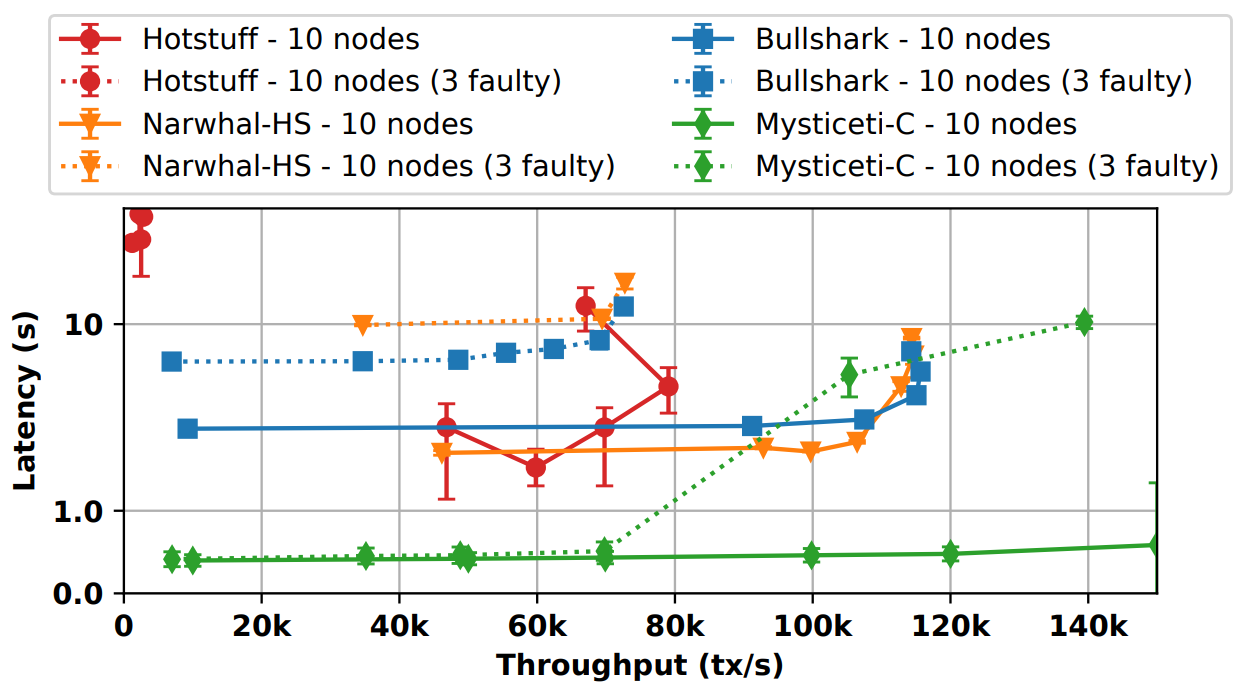

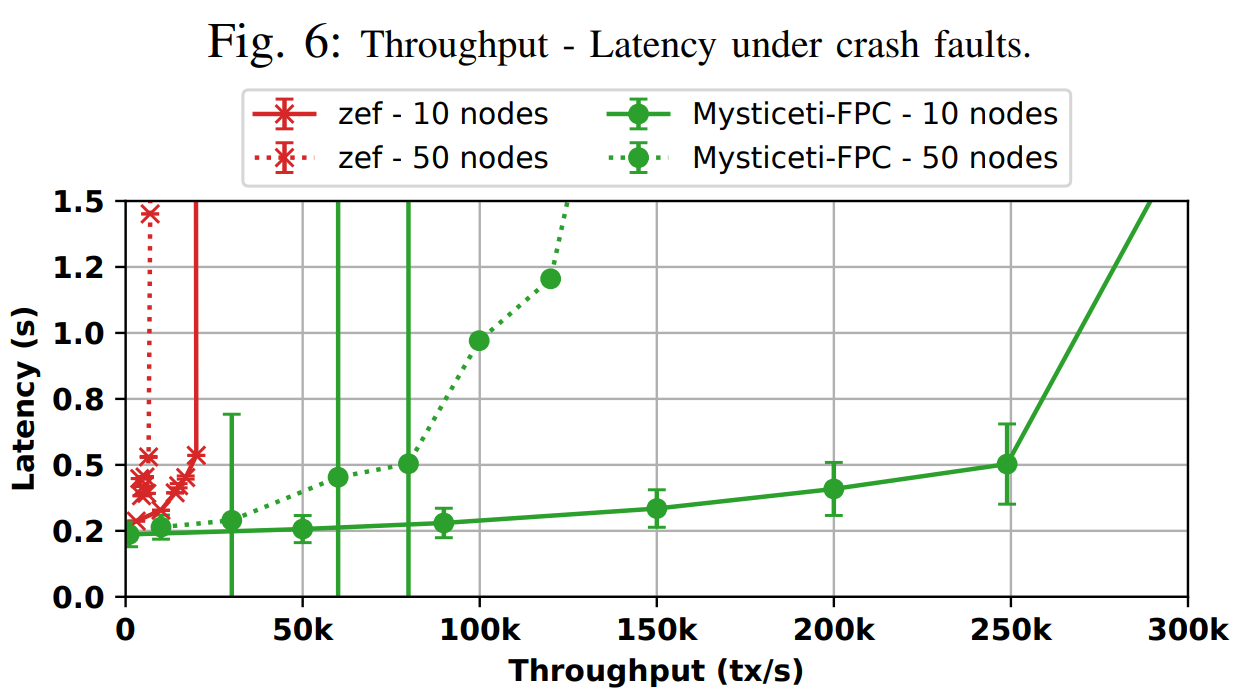

Now let's see how Mysteciti compares to Narwhal-HS, Bullshark, and Hotstuff. The table and the figures below show how they perform in terms of transactions per second and latency in ideal conditions, with faults, and with the fast path arrangements.

Ideal Conditions

| Consensus | TPS, transactions per second | Latency, seconds |

|---|---|---|

| Mysteciti-C (10 nodes) | 10,000-470,000 | 0.4-1.8 |

| Mysteciti-C (50 nodes) | 10,000-400,000 | 0.4-1.0 |

| Bullshark (10 nodes) | 50,000-130,000 | 2.0-4.0 |

| Bullshart (50 nodes) | 50,000-130.000 | 2.1-4.0 |

| Narwhal HS (10 nodes) | 50,000-130,000 | 1.8-4.0 |

| Narwhal HS (50 nodes) | 50,000-130,000 | 1.8-1.9 |

| Hotstuff (10 nodes) | 50,000-70,000 | 1.5-3.8 |

| Hotstuff (50 nodes) | 20,000-30,000 | 3.2-2.3 |

With Faults

| Consensus | TPS, transactions per second | Latency, seconds |

|---|---|---|

| Mysteciti-C (10 nodes) | 10,000-150,000 | 0.4-0.6 |

| Mysteciti-C (50 nodes) | 8,000-140,000 | 0.4-1.0 |

| Bullshark (10 nodes) | 9,000-115,000 | 4.5-8.0 |

| Bullshart (50 nodes) | 7,000-73,000 | 7.0-12.0 |

| Narwhal HS (10 nodes) | 45,000-115,000 | 3.0-9.0 |

| Narwhal HS (50 nodes) | 35,000-72,000 | 10.0-14.0 |

| Hotstuff (10 nodes) | 48,000-79,000 | 2.5-12 |

| Hotstuff (50 nodes) | 1,000-2,500 | 14.0-16.0 |

| Consensus | TPS, transactions per second | Latency, seconds |

|---|---|---|

| Mysteciti-FPC (10 nodes) | 0-280,000 | 0.2-1.5 |

| Mysteciti-FPC (50 nodes) | 0-148,000 | 0.2-1.5 |

| zef (10 nodes) | 0-30,000 | 0.25-1.5 |

| zef (50 nodes) | 3,000-5,000 | 0.35-1.45 |

Updated 3 months ago